Cyber techniques support real-world material design

Multiple teams of Georgia Tech researchers are utilising cyber techniques to support accelerated materials design. Here are a few of the innovative efforts underway by research teams that include engineers, chemists, physicists, computer scientists, and others.

A specialist in quantum chemistry, Professor David Sherrill is streamlining the materials analysis process by developing improved methods for studying atomic-scale chemistry. He is shown in the School of Chemistry and Biochemistry’s computer cluster.

ADVANCING MOLECULAR MODELING

A major goal of materials science and engineering is a more accurate understanding of material structures and properties and of how they influence one another. Such knowledge makes it easier to predict which real-world properties a theoretical material would possess once it is made.

Currently, researchers use computers to delve into materials structures using two approaches. The first relies on experimental data, derived from examining actual materials using microscopy, spectroscopy, X-rays, and other techniques. This data is plugged into computer models to gain insight into materials behavior.

The second approach involves models based on “first principles” methods. Such models are developed by pure computation, utilising established scientific theory without reference to experimental data. These “ab initio” or “physical” models are widely regarded as useful, but they are not necessarily fully accurate when approximations are made to speed up the computation.

Materials researchers strive to balance and integrate the experiment-based methodology with the theoretical approach. Investigators continually compare one type of result to the other in the drive to obtain accurate insights into materials structures.

Professor David Sherrill is a specialist in quantum chemistry in Georgia Tech’s School of Chemistry and Biochemistry. He is working to streamline the materials analysis process by developing improved methods for studying atomic-scale chemistry.

“The dream is that if you had truly accurate and predictive models, you would need much less on the experimental side,” Sherrill said. “That would save both time and money.”

Sherrill and his research team have made progress toward more definitive physical models. They’ve demonstrated that cutting-edge computing techniques can produce highly accurate physical models of the interior forces at work in a molecule.

With funding from the National Science Foundation, Sherrill’s team studied crystals of benzene, a fundamental organic molecule. In a proof-of-concept effort, they developed advanced analytic software that supports parallel processing on supercomputers — making possible the high level of computational power required for modeling molecules.

The team’s efforts culminated in a benzene model of exceptional accuracy. That success showed that it is indeed possible to compute nearly exact crystal energies, also called lattice energies, for organic molecules.
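For readers who want a feel for what a lattice energy is, here is a minimal Python sketch. It assumes a simple two-body approximation, in which the lattice energy per molecule is half the sum of the interaction energies between one molecule and all of its neighbours, and it substitutes a toy Lennard-Jones potential on a hypothetical simple cubic lattice for the quantum chemistry energies a real study would compute. The function names and parameters are illustrative only; this is not the Sherrill team’s method or software.

```python
import itertools
import math


def pair_energy(r, epsilon=1.0, sigma=1.0):
    """Toy Lennard-Jones interaction energy (arbitrary units) at separation r.

    Hypothetical stand-in for a quantum chemistry dimer interaction energy.
    """
    sr6 = (sigma / r) ** 6
    return 4.0 * epsilon * (sr6 * sr6 - sr6)


def lattice_energy(spacing, shells=3):
    """Two-body lattice energy per molecule on a hypothetical simple cubic lattice.

    Each pair interaction is shared by two molecules, hence the factor 0.5.
    Neighbours up to `shells` lattice steps away along each axis are included.
    """
    steps = range(-shells, shells + 1)
    total = 0.0
    for i, j, k in itertools.product(steps, steps, steps):
        if (i, j, k) == (0, 0, 0):
            continue  # skip the reference molecule itself
        r = spacing * math.sqrt(i * i + j * j + k * k)
        total += pair_energy(r)
    return 0.5 * total


if __name__ == "__main__":
    # Scan lattice spacings and report the most stable (lowest-energy) one.
    energy, spacing = min((lattice_energy(a / 100), a / 100) for a in range(90, 151))
    print(f"spacing={spacing:.2f}, lattice energy={energy:.3f}")
```

Scanning the spacing and taking the minimum gives the equilibrium lattice energy of this toy model. A real calculation would use a realistic crystal structure and would also account for three-body and higher-order contributions.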

“The work demonstrates that first-principles methods can be made accurate enough that you can rely on the energies calculated,” Sherrill said. “Based on that data, you can then go on to derive accurate material geometries and properties, which is what we really need to know.”

The Sherrill team is part of a multi-institution group that is developing a program called Psi4, an open-source suite of quantum chemistry programs that exploits first-principles methods to simulate molecular properties. Sherrill’s team used a version of Psi4 in its analysis of benzene.

Sherrill, a member of Georgia Tech’s Center for Organic Photonics and Electronics (COPE), believes the modeling capabilities demonstrated in the benzene project will lead to better predictive techniques for other organic molecules. “I’m very excited about this advance in quantum chemistry,” he said. “I believe in a few years we’ll be doing highly accurate physics-based calculations of molecular energetics routinely.”

Professor Richard Neu is developing new materials that can withstand the extreme temperatures in jet engines and gas turbines. Key to his work is understanding how atoms diffuse through materials under varying conditions. He is shown in the intake of a jet engine at the Delta Flight Museum in Atlanta.

MODELING SUPERALLOY PERFORMANCE

Richard W. Neu, a professor in Georgia Tech’s George W. Woodruff School of Mechanical Engineering, develops materials that can withstand the extreme temperatures in jet aircraft engines and energy-generating gas turbines. With funding from the U.S. Department of Energy and multinational corporations, he investigates fatigue and fracture in metal alloy engine parts that are constantly exposed to heat soaring past 1,400°C, as well as to continuous cycles of heating and cooling.

“We want engine parts to withstand ever-higher temperatures, so we must either improve existing materials or devise new ones,” Neu said. “The most effective way to do that is to understand the complex interactions of materials microstructures at the grain level, so we can vary chemical composition and get improved performance.”

Each grain is a set of atoms arranged in a crystal structure with the same orientation. The ways in which different grains fit together play a major role in determining an alloy’s properties, including strength and ductility.

Key to Neu’s materials development work is understanding how atoms diffuse through materials under various conditions — a daunting assignment when more than 10 different elements are mixed together in a single alloy. To perform such studies, he turns to advanced cyber techniques that help him understand materials processes at atomic dimensions.

Vast computer databases, developed by materials scientists to describe the thermodynamics and mobility of the atoms in simple binary alloys, now offer critical insights into more complex alloy structures, Neu said. These data collections provide information about what’s taking place at both the microscale and mesoscale during long-term, high-temperature exposures.

Such databases consist of information developed either experimentally or through calculations based on first principles — basic physical theory. Using models built with this data, Neu and his team can understand how the strengthening phases within these grains change with exposure and can predict their impact on material properties.
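Mobility databases of the kind Neu describes typically store parameters from which diffusion rates can be computed. As a minimal sketch, assuming the textbook Arrhenius form for a single diffusing species, the diffusion coefficient is D = D0 exp(-Q/RT), and a characteristic diffusion distance after time t is roughly sqrt(Dt). The parameter values in the usage lines are hypothetical placeholders; real multicomponent databases make these parameters depend on alloy composition.

```python
import math

GAS_CONSTANT = 8.314  # J/(mol*K), rounded


def diffusivity(d0, activation_energy, temp_k):
    """Arrhenius diffusion coefficient D = D0 * exp(-Q / (R * T)) in m^2/s.

    d0: pre-exponential factor (m^2/s); activation_energy: Q (J/mol); temp_k: kelvin.
    """
    return d0 * math.exp(-activation_energy / (GAS_CONSTANT * temp_k))


def diffusion_length(d, seconds):
    """Characteristic diffusion distance sqrt(D * t) in metres."""
    return math.sqrt(d * seconds)


if __name__ == "__main__":
    # Hypothetical parameters, chosen only to show the temperature sensitivity.
    for celsius in (1000, 1100):
        d = diffusivity(d0=1e-4, activation_energy=280e3, temp_k=celsius + 273.15)
        print(celsius, diffusion_length(d, seconds=1000 * 3600))
```

The exponential dependence on temperature is why long high-temperature exposures can noticeably change the strengthening phases inside the grains.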

Neu is using such modeling capabilities to improve nickel-based superalloys — high-performance metals used in the hottest parts of turbine engines. Even small increases in the temperature tolerance of these alloys can result in important performance gains.

He’s also investigating more novel materials, including refractory metals such as molybdenum. Although molybdenum alone breaks down at high temperatures through oxidation, when combined with silicon and boron it can produce an alloy that may offer heat-tolerance increases of 100°C or more.

UTILISING INVERSE DESIGN

When a material is manufactured, the necessary processing can change it at the molecular level. So an alloy or other material that once appeared well suited to a given use can be internally flawed by the time it’s a finished product.

Professor Hamid Garmestani of Georgia Tech’s School of Materials Science and Engineering is investigating this endemic problem. Working with Professor Steven Liang of the School of Mechanical Engineering and researchers from Novelis Inc., Garmestani is using an approach called inverse process design to pick out better candidate materials. The effort is funded by the Boeing Company.

Garmestani’s methodology starts by examining a material’s actual microstructure at the end of the manufacturing cycle. It then employs a reverse engineering approach to see what changes would enable the material to better withstand manufacturing stresses.

The Garmestani team analyses a finished part from the standpoint of its properties. If its post-processing microstructure no longer has the right stuff to perform required tasks, the researchers think in terms of a better starting material.

“Our approach is the inverse of what’s done conventionally, where you look for a material with the desired properties and work forward from there,” Garmestani said. “We start from the final microstructure and work back to the initial microstructure. If we know what an optimal final microstructure would look like, then we can figure out what the initial microstructure would have to be in order to get there.”
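The inverse idea can be illustrated with a toy search. The sketch below assumes a hypothetical forward process model (`forward_process`) that maps an initial microstructure, summarised by two descriptors, to the microstructure after manufacturing; it then searches a grid of candidate starting points for the one whose processed result lands closest to a target. The descriptors, coefficients, and grid are invented for illustration; a real inverse design framework would use physics-based process models and far richer microstructure representations.

```python
import itertools


def forward_process(initial):
    """Hypothetical forward model: initial -> final microstructure descriptors.

    A descriptor is (phase volume fraction, mean grain size in micrometres).
    The linear coefficients are invented for illustration only.
    """
    fraction, grain_size = initial
    final_fraction = min(1.0, 0.9 * fraction + 0.05)  # hypothetical phase change
    final_grain = 0.6 * grain_size + 2.0  # hypothetical grain refinement
    return (final_fraction, final_grain)


def mismatch(a, b, scales=(1.0, 10.0)):
    """Scaled squared distance between two descriptors (scales balance the units)."""
    return sum(((x - y) / s) ** 2 for x, y, s in zip(a, b, scales))


def inverse_design(target_final, fractions, grain_sizes):
    """Return the candidate initial microstructure whose processed result is
    closest to the target final microstructure (exhaustive grid search)."""
    candidates = itertools.product(fractions, grain_sizes)
    return min(candidates, key=lambda init: mismatch(forward_process(init), target_final))


if __name__ == "__main__":
    fractions = [i / 20 for i in range(21)]
    grain_sizes = range(5, 61, 5)
    print(inverse_design((0.5, 20.0), fractions, grain_sizes))
```

Exhaustive search keeps the sketch transparent; with many descriptors, an optimiser would replace the grid.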

To achieve this, Garmestani develops microscale computer representations of the final material microstructure and the initial microstructure. He also considers the processes a material undergoes during manufacturing, plugging in data on forging, machining, coating, and other processes that can affect a material internally.

By representing materials at the level of grains, the interlocking crystalline regions that make up a material, Garmestani can compute the effect of specific processing steps on the microstructure. The distribution of grains, and their interrelationship with myriad tiny defects and other features, is key to determining a material’s properties.

Utilising a mathematical framework, the researchers digitise a material at the micron scale and even at the nanoscale to trace the minute effects of each manufacturing phase. That data helps track the role of every step in altering the distribution of internal features.

“As the microstructure changes, we can predict what the properties would be depending on how the constituents and the statistics of the distribution change,” Garmestani said. “In this way we can optimise the process or the microstructure or both, and find that unique initial microstructure which is best suited to the needs of the designer or the manufacturer.”
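Garmestani’s framework is described here only in terms of “the statistics of the distribution.” One widely used family of such statistics in microstructure analysis is the two-point correlation function, which gives the probability that two points a fixed distance apart both fall in the same phase. The sketch below assumes that choice and a digitised, two-phase microstructure stored as a grid of 0s and 1s with periodic boundaries; it is an illustration, not the team’s code.

```python
def volume_fraction(grid):
    """Fraction of pixels that belong to phase 1 in a binary (0/1) microstructure."""
    cells = [value for row in grid for value in row]
    return sum(cells) / len(cells)


def two_point_correlation(grid, dx, dy):
    """Probability that two pixels separated by (dx, dy) are both phase 1.

    Assumes periodic boundaries, so the separation vector wraps around the edges.
    """
    rows, cols = len(grid), len(grid[0])
    hits = 0
    for i in range(rows):
        for j in range(cols):
            hits += grid[i][j] * grid[(i + dy) % rows][(j + dx) % cols]
    return hits / (rows * cols)


if __name__ == "__main__":
    # Toy microstructure: vertical stripes of phase 1, one pixel wide.
    stripes = [[1, 0, 1, 0] for _ in range(4)]
    print(volume_fraction(stripes), two_point_correlation(stripes, 1, 0))
```

At zero separation the correlation equals the volume fraction; how it varies with separation captures the spatial arrangement that a processing step can alter even when the volume fraction stays the same.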

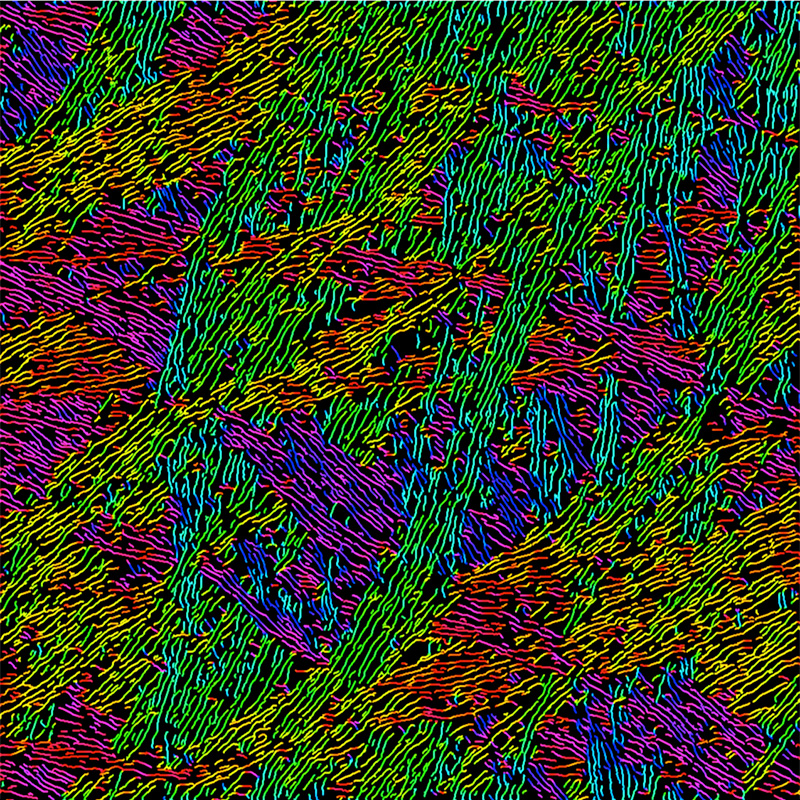

This image shows the alignment of polymer nanofibers that enable the fabrication of high-performance flexible electronic devices. In this processed microscopy image, fibers’ orientations are color coded to help researchers analyse their structure and alignment. The research team includes Professors Martha Grover and Elsa Reichmanis, and graduate research assistants Michael McBride and Nils Persson, all from the School of Chemical and Biomolecular Engineering.

DEVISING EXPERIMENTATION STRATEGIES

Martha Grover, a professor in Georgia Tech’s School of Chemical and Biomolecular Engineering (ChBE), is studying how to structure real-world experiments in ways that best support materials development. Grover and her team are developing specific experimentation strategies that optimise the relationship between the experimentalists who gather data and the theoretical modelers who process it computationally.

“What’s needed is a holistic approach that uses all the information available to help materials development proceed smoothly,” Grover said. “Considering the objectives of both experimentalists and modelers helps everybody.”

Grover and her group are using an approach known as sequential experimental design to tackle this issue. Working with Professor Jye-Chyi Lu of Georgia Tech’s H. Milton Stewart School of Industrial and Systems Engineering (ISyE), Grover has developed customised statistical methods that allow collaborating teams to judge how well a given model fits the available experimental data and at the same time create models that can help design subsequent experiments.

For instance, in one project, Grover worked with graduate student Paul Wissmann to optimise the surface roughness of yttrium oxide thin films. The work required costly experiments aimed at learning the effects of various process temperatures and material flow rates.

After gathering initial experimental data, the researchers built a series of models using an eclectic approach. On the one hand, they created empirical models of the thin-film deposition process using data from the experiments. But they also developed another set of models using algorithms based solely on first principles — science-based physical theory requiring no real-world data.

Then, using a computational statistics method, they combined the two modeling approaches. The result was a hybrid model that offered new insights and also let the researchers limit the number of additional experiments needed.

“Tailored statistical methods provide us with a systematic type of decision-making,” Grover said. “We can use statistics to tell us, given the data that we have, which model is most likely to be true.”
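The article does not spell out Grover’s tailored methods, so the following is only a generic sketch of the idea in her quote. It assumes independent Gaussian measurement noise with a known standard deviation and equal prior odds for each candidate model, converts each model’s fit to the data into a posterior probability, and then proposes the next experiment where the probability-weighted models disagree most. The model functions, data, and disagreement rule are hypothetical choices for illustration.

```python
import math


def log_likelihood(model, xs, ys, sigma):
    """Log-likelihood of the data under `model`, assuming independent Gaussian noise."""
    total = 0.0
    for x, y in zip(xs, ys):
        residual = (y - model(x)) / sigma
        total += -0.5 * residual * residual - math.log(sigma * math.sqrt(2.0 * math.pi))
    return total


def model_probabilities(models, xs, ys, sigma):
    """Posterior probability of each model given the data, with equal priors."""
    logs = [log_likelihood(m, xs, ys, sigma) for m in models]
    best = max(logs)
    weights = [math.exp(value - best) for value in logs]  # shift by max to avoid underflow
    total = sum(weights)
    return [w / total for w in weights]


def next_experiment(models, probabilities, candidates):
    """Choose the candidate condition where the probability-weighted models disagree most."""

    def disagreement(x):
        predictions = [m(x) for m in models]
        mean = sum(p * y for p, y in zip(probabilities, predictions))
        return sum(p * (y - mean) ** 2 for p, y in zip(probabilities, predictions))

    return max(candidates, key=disagreement)


if __name__ == "__main__":
    # Two hypothetical models, e.g. an empirical fit and a physics-based one.
    def empirical(x):
        return 2.0 * x

    def physical(x):
        return x * x

    xs, ys = [0.0, 1.0, 2.0], [0.1, 1.9, 4.1]  # hypothetical measurements
    probs = model_probabilities([empirical, physical], xs, ys, sigma=0.5)
    print(probs, next_experiment([empirical, physical], probs, [0.5, 1.5, 2.5, 3.0]))
```

Running an experiment where the models disagree most is one simple way to make each costly measurement as informative as possible about which model to trust.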

Grover is currently working with ChBE Professor Elsa Reichmanis, an experimentalist studying organic polymers. Their current project involves finding ways to print organic electronics for potential use in roll-to-roll manufacturing. This technique could provide large numbers of inexpensive flexible polymer devices for applications from food safety and health sensors to sheets of solar cells.

The collaborators are using sequential experimental design approaches as they investigate organic polymer fiber structures at both the nanoscale and the microscale using atomic force microscopy.

“Tight coupling of modeling and experimentation is helping us to develop optimal fiber size and arrangement, and to examine the hurdles involved in scaling up production processes,” Grover said.

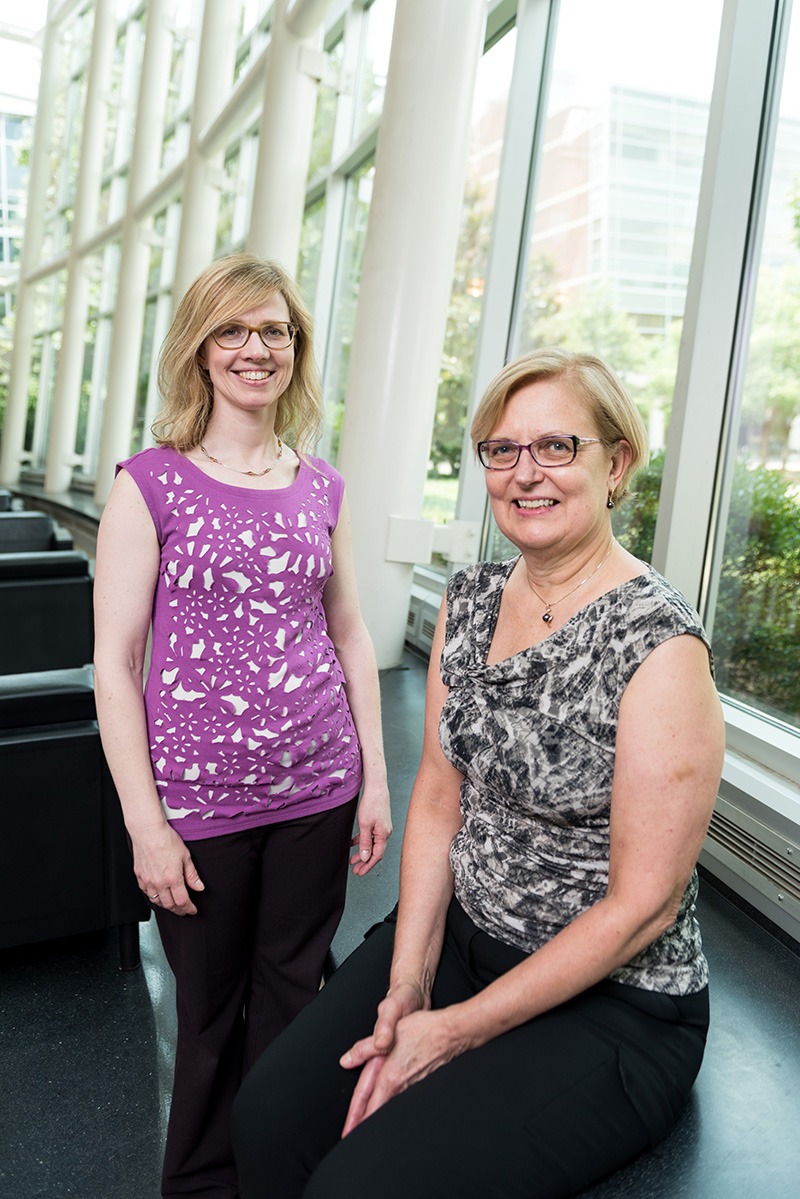

Professors Martha Grover and Elsa Reichmanis are using sequential experimental design techniques to develop new ways of printing organic electronics. Both are professors in the School of Chemical and Biomolecular Engineering.

PINPOINTING MATERIALS CANDIDATES

Finding an optimal material among thousands of candidates is a challenging job that requires a blend of human expertise and computational power.

David Sholl, who holds the Michael E. Tennenbaum Family Chair in the School of Chemical and Biomolecular Engineering (ChBE), works two sides of the materials selection challenge. He and his team use predictive computer models to find candidate materials for specific applications, and at the same time they focus on continually improving the computational techniques they’re using.

Each project requires the team to painstakingly develop a large database of possible materials for a target application, drawing on existing materials information. Then the researchers use computer models to validate this collected data against both experimental findings and first-principles physical analysis. The results are numerical calculations that provide information on materials structures down to the molecular level.

“It isn’t a case of giving the computer a list of materials and having it do everything,” said Sholl, who is also a Georgia Research Alliance Eminent Scholar. “We have to put together a list of thousands of potential materials, and then we examine and verify the existing data on those materials. Only then can we do a staged series of calculations to look for materials with the key properties needed for a particular application.”

Sholl describes this calculation process as a “nested approach.” His team screens large numbers of materials using a simplified set of approximations that are amenable to high-throughput processing on supercomputers. What’s left are candidates of particular interest, which are then evaluated more closely.
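The nested approach can be expressed as a two-stage filter. In this minimal sketch, `cheap_score` stands in for the simplified, high-throughput approximation and `expensive_score` for the more detailed calculation; both are hypothetical callables, and lower scores are assumed to be better.

```python
def nested_screen(candidates, cheap_score, expensive_score, keep):
    """Two-stage screen (lower scores are better).

    Stage 1 ranks every candidate with the cheap approximation; stage 2 re-ranks
    only the best `keep` survivors with the expensive calculation.
    """
    shortlist = sorted(candidates, key=cheap_score)[:keep]
    return sorted(shortlist, key=expensive_score)


if __name__ == "__main__":
    calls = []

    def expensive(x):
        calls.append(x)  # record how often the costly stage actually runs
        return abs(x - 503)  # hypothetical "true" figure of merit

    ranked = nested_screen(range(1000), lambda x: abs(x - 500), expensive, keep=20)
    print(ranked[:3], len(calls))
```

Only `keep` candidates ever reach the expensive stage, which is the point of the nesting. The trade-off is that a good material ranked poorly by the cheap approximation is never re-examined, so the first-stage approximation has to be trustworthy enough to avoid discarding strong candidates.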

He and his team are currently working on several materials selection projects sponsored by the Department of Energy. These include finding the best materials to eliminate contaminants from natural gas; developing materials for capturing carbon dioxide from the atmosphere and industrial smokestacks; and making high-performance membranes to separate chemicals.

Sholl believes that in the next few years emerging data science methodologies will help his team streamline what is in many ways a big data challenge. More efficient methods could mean less time spent preparing and validating materials data, and more time spent evaluating the likely candidates.

“Here at Georgia Tech I’m closely involved with people developing various kinds of materials, who are keenly focused on scaling them and integrating them into actual technologies,” he said. “That relationship is always pushing me to think about how to use my team’s calculations to help develop real-world applications, rather than just producing lots of information.”

Le Song, an assistant professor in Georgia Tech’s School of Computational Science and Engineering, is using machine-learning techniques to investigate organic materials that could replace inorganic materials in solar cell designs. He is shown with a photovoltaic array at Georgia Tech’s Carbon Neutral Energy Solutions Laboratory.

SPEEDING BIG DATA ANALYSIS

The sheer volume of available data can make it challenging to find key information. Even supercomputers can take months to mine massive datasets for useful answers.

Le Song, an assistant professor in Georgia Tech’s School of Computational Science and Engineering, is tackling big data challenges related to materials development, with support from the National Science Foundation. Advanced processing techniques, he explained, can speed up the screening of thousands of theoretical materials created by computer simulation, decreasing the time between discovery and real-world use.

As elsewhere in materials science, Song deals with the complex interplay between computer simulations based on first-principles physics theory and the computational analysis of data from laboratory experiments.

Part of his work involves using machine-learning approaches that delve deep into first-principles models to identify materials candidates. Machine learning, closely associated with data analytics and predictive analytics, uses advanced algorithms to automate the evaluation of patterns in data.

For example, in one project, Song is investigating organic materials that could replace inorganic materials in solar cell designs. He decided against building complex original models of each hypothetical material from quantum mechanical theories of organic molecules, which would be a lengthy and expensive computational undertaking.

Instead, he’s using molecular datasets that are already available, along with custom machine-learning techniques, to create predictive models in just hours of computer time. Despite this simplified approach, these models can accurately link a candidate material’s structure to its potential properties.
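The article does not name the machine-learning techniques Song uses. As a stand-in, this sketch shows one of the simplest ways to predict a property from an existing molecular dataset: k-nearest-neighbour regression, where each molecule is represented by a hypothetical structural fingerprint (a set of “on” bit indices) and similarity is measured with the Tanimoto coefficient.

```python
def tanimoto(a, b):
    """Tanimoto similarity of two fingerprints given as sets of 'on' bit indices."""
    union = a | b
    if not union:
        return 1.0  # two empty fingerprints are treated as identical
    return len(a & b) / len(union)


def knn_predict(query, dataset, k=3):
    """Similarity-weighted mean property of the k most similar known molecules.

    dataset: list of (fingerprint_set, property_value) pairs.
    """
    neighbours = sorted(dataset, key=lambda item: tanimoto(query, item[0]), reverse=True)[:k]
    weights = [tanimoto(query, fingerprint) for fingerprint, _ in neighbours]
    total = sum(weights)
    if total == 0:
        # No structural overlap at all: fall back to a plain average.
        return sum(value for _, value in neighbours) / len(neighbours)
    return sum(w * value for w, (_, value) in zip(weights, neighbours)) / total


if __name__ == "__main__":
    # Hypothetical fingerprints and property values.
    known = [({1, 2, 3}, 1.0), ({1, 2, 4}, 2.0), ({7, 8, 9}, 10.0)]
    print(knn_predict({1, 2, 3, 4}, known, k=2))
```

Once such a model is built from existing data, each new prediction costs only a few set operations, which is the kind of speed-up over fresh quantum mechanical calculations the paragraph describes; practical systems use richer descriptors and more flexible learners.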

Song makes use of the parallel computing capabilities of graphics processing units to help reduce the time needed for computation. These relatively inexpensive high-speed devices are suited to demanding computational tasks in fields that include neural networks, modeling, and database operations.

In other research, Song avoids first-principles approaches and utilises hard data from experiments. Working with images of metals for aircraft applications, he’s examining alloys at the grain level, the scale of the individual crystals that make up a metal, to develop information on how a material’s microstructure is linked to specific desirable properties.

To support their work, Song and his team use a range of deep learning techniques such as convolutional neural networks. This deep learning architecture, loosely inspired by the organisation of neurons in the visual cortex, is well suited to analysing images, video, and other data. The technology can automate the extraction of important features that help guide the materials-analysis process, lessening the need for human involvement.
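The core operation of a convolutional neural network is sliding a small filter over an image. In a trained network the filter values are learned from data; in this minimal sketch they are set by hand to a hypothetical vertical-edge detector, the kind of feature that could highlight grain boundaries in a micrograph. The image is a toy grid of pixel intensities.

```python
def conv2d(image, kernel):
    """'Valid' 2-D cross-correlation: the operation CNN layers call convolution.

    The kernel slides over every position where it fits entirely inside the image.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map):
    """Rectified linear unit: keep positive responses, zero out the rest."""
    return [[max(0.0, value) for value in row] for row in feature_map]


if __name__ == "__main__":
    # Toy micrograph: a dark grain (0) next to a bright grain (1).
    micrograph = [[0, 0, 1, 1] for _ in range(3)]
    edge_kernel = [[-1, 1]]  # hand-set stand-in for a learned filter
    print(relu(conv2d(micrograph, edge_kernel)))
```

The output is nonzero only where the intensity jumps, which is how stacked, learned filters of this kind can pick out features automatically instead of relying on a person to trace them.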

SIMPLIFYING COMPUTATIONAL MATERIALS DESIGN

Andrew Medford is focused on developing materials informatics techniques to find novel materials more quickly. He is working with machine learning, probability theory, and other methods that can locate key information in large datasets and point the way to promising new formulations.

Highly accurate materials analysis is now available, thanks to density functional theory, quantum chemistry, and other advanced techniques, he explained. These approaches use first-principles physical methods to calculate the properties of complex systems at the atomic scale.

But these approaches have two drawbacks, said Medford, a postdoctoral researcher in the School of Mechanical Engineering (ME) who works with ME Professor Surya Kalidindi and will join the School of Chemical and Biomolecular Engineering faculty in January. First, they require large amounts of computational time; second, they produce huge datasets where critical information can be hard to find.

“So the next step is learning how to fully exploit the data we generate,” Medford said. “Hundreds of thousands of different potential compounds might work for a specific application, and performing complete calculations on more than a select few isn’t possible.”

The key, he said, is finding ways to use existing materials-related data, along with novel informatics approaches, to more effectively search “high-dimensional” problems: datasets that contain many different materials attributes. Developing the right data science techniques is critical to this effort, including better ways to generate, store, and analyse datasets with effective big data techniques, and better ways to organise collaborating communities of researchers to help build materials databases.

One major issue involves integrating the sheer variety of available technologies, including various types of datasets, computational methods, and data storage systems. Researchers also need more effective ways to predict the accuracy of the data being generated, for example by improving uncertainty quantification and other techniques used to gauge how dependable the information is.
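Uncertainty quantification can take many forms. One simple, widely used approach is to fit an ensemble of models to resampled versions of the data and treat the spread of their predictions as a dependability signal. The sketch below assumes a straight-line model and bootstrap resampling; the data and function names are hypothetical.

```python
import random
import statistics


def fit_line(points):
    """Ordinary least-squares fit y = slope * x + intercept.

    Returns None when all x values are identical (the slope is undefined).
    """
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    mean_x, mean_y = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        return None
    slope = sum((x - mean_x) * (y - mean_y) for x, y in points) / sxx
    return slope, mean_y - slope * mean_x


def bootstrap_predict(points, x, n_models=200, seed=0):
    """Mean and standard deviation of predictions from a bootstrap ensemble of line fits.

    A larger standard deviation signals a less dependable prediction at x.
    """
    if fit_line(points) is None:
        raise ValueError("need at least two distinct x values")
    rng = random.Random(seed)
    predictions = []
    while len(predictions) < n_models:
        sample = [rng.choice(points) for _ in points]  # resample with replacement
        fit = fit_line(sample)
        if fit is None:
            continue  # skip degenerate resamples with a single distinct x
        slope, intercept = fit
        predictions.append(slope * x + intercept)
    return statistics.mean(predictions), statistics.stdev(predictions)


if __name__ == "__main__":
    data = [(0, 1.1), (1, 2.9), (2, 5.2), (3, 6.8)]  # hypothetical measurements
    for x in (1.5, 10.0):
        print(x, bootstrap_predict(data, x))
```

With noisy data, the spread is typically larger when predicting far outside the measured range than inside it, which is exactly the kind of warning uncertainty quantification is meant to provide.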

One typical challenge, Medford said, involves a highly important class of materials: catalysts used to process synthetic or bio-derived fuels. Investigators must screen fuel compounds and biomass precursors so complex that calculating properties for even a single potential reaction pathway is computationally overwhelming.

The good news is that the fundamental chemistry of these systems involves only a few elements, namely carbon, hydrogen, and oxygen, which bond in a limited number of ways. This insight could simplify the high-dimensional informatics challenge involved in finding candidate materials.

“Novel data-driven approaches can reduce the complexity of these systems into a few key descriptors,” Medford said. “That can provide a route to rapid computational screening of potential materials for synthetic fuel catalysts and help bring more effective processing methods to industry much faster.”
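One well-known way catalysis researchers reduce complexity to a few descriptors is through linear scaling relations, in which the adsorption energy of one species is approximated as a linear function of another, simpler quantity. This sketch assumes such a single linear descriptor: it fits the relation by least squares and then keeps candidates whose predicted energy falls in a target window. All numbers, names, and the window are hypothetical, and the sketch is not drawn from Medford’s own work.

```python
def fit_scaling_relation(descriptor, target):
    """Least-squares fit target ~ slope * descriptor + intercept."""
    n = len(descriptor)
    mean_x = sum(descriptor) / n
    mean_y = sum(target) / n
    sxx = sum((x - mean_x) ** 2 for x in descriptor)
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(descriptor, target)) / sxx
    return slope, mean_y - slope * mean_x


def screen_by_descriptor(candidates, slope, intercept, window):
    """Keep candidates whose predicted target value lies inside window = (low, high).

    candidates: list of (name, descriptor_value) pairs.
    """
    low, high = window
    return [name for name, d in candidates if low <= slope * d + intercept <= high]


if __name__ == "__main__":
    # Hypothetical descriptor and target energies (eV) on a handful of surfaces.
    slope, intercept = fit_scaling_relation([-1.0, 0.0, 1.0, 2.0], [-0.7, -0.2, 0.3, 0.8])
    print(slope, intercept)
    candidates = [("A", -2.0), ("B", 0.4), ("C", 3.0)]
    print(screen_by_descriptor(candidates, slope, intercept, (-0.5, 0.5)))
```

Once the relation is fitted, screening a new candidate needs only its descriptor value rather than a full calculation for every reaction intermediate, which is what makes rapid computational screening possible.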

One thing is certain: The Materials Genome Initiative is important to U.S. economic development, and cyber-enabled materials have a key role to play in that effort. Georgia Tech research teams will continue developing ways to reduce the time and cost involved in moving advanced materials from the supercomputer and the laboratory to real-world applications.